Closing the Gap by Optimising External Images with Cloudflare Workers and Cloudinary

When it comes to sustainability, it’s all well and good building an amazing website where the templates are well optimised, minimising the download size of the images, CSS and JavaScript that are baked in. But if a content editor subsequently adds content filled with huge, poorly optimised images, that good work can be undone very quickly. This isn’t usually something they do deliberately. More often it happens because they don’t realise how large the images are or what impact that has, especially now that they’re often on high-speed connections themselves.

Dealing with large images uploaded within Umbraco itself has traditionally been a lot easier to control. You can use the cropper datatype to ensure that only smaller crops are used, or simply add querystrings to the end of image URLs at view output time to have them automatically resized and converted to optimised modern formats like WebP using the built-in ImageSharp.Web functionality. However, all of this only works when the images are hosted on the same website as the solution.

What I’m going to look at here is a way to tackle the more annoying problem where someone is linking out to images from somewhere else (and I mean where they are permitted to, not when they’re hotlinking to some poor other website owner without their knowledge). This tends to be more of an issue with freeform content elements like Rich Text Editors and Markdown Editors. In the case of Rich Text Editors, you can’t normally choose external images directly through the UI. However, I’ve found they still creep in sometimes if a user has copied and pasted content from something they’d previously written elsewhere, for example a blog, social media or even an older copy of the website pages. This is common during new builds, when editors are looking for the fastest possible way to bring content over from a different system, and the copying often brings the full original external image URL along with it. If it looks fine on the screen and works, why would they then replace it? You could find ways to block this, but I decided to find a way to transparently optimise any external image URLs too, most probably without the editors even realising.

My solution for this hinges on the combined use of Cloudflare Workers with Cloudinary. Cloudflare Workers are Cloudflare’s way of allowing you to hook scripts onto your own URLs at their CDN edge, intercepting requests to those addresses and transparently executing some JavaScript server-side. Cloudinary is an external image optimising service which does a lot of clever optimising of images, and has a very generous free tier. Particularly useful here, Cloudinary allows you to optimise any image URL simply by tagging the existing URL onto the end of your account URL, effectively creating a simple image proxy service.

Getting Cloudinary Working

First of all, ensure you have an account with Cloudinary set up. This should then generate you a base URL of the form https://res.cloudinary.com/*your_identifier*/ . Everything after this in the URL is then a parameter string, and there are multiple ways of setting this up using some well documented parameters from the site. However I’ve found just a fairly simple set of options can get some excellent results from their intelligent optimiser. My resulting URLs are of the form:-

https://res.cloudinary.com/*your_identifier*/image/fetch/q_auto:good,f_auto/https://original.image.example.com/image.png

The two simple parameters ‘q_auto:good’ for ‘quality auto good’ and ‘f_auto’ for ‘format auto’ catch most of the common culprits for poorly optimised images, and in my own tests were very good at making intelligent decisions. The optimiser can work out, for example, when to convert a photo that someone originally saved as a huge PNG rather than a JPG down to a lossy WebP. It can also work out when a simple graphic that was correctly saved as a PNG can still be transcoded further to a lossless WebP rather than a lossy one.
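To make that URL pattern concrete, here is a minimal JavaScript sketch that assembles it. The function name `buildCloudinaryFetchUrl` and the `demo` identifier are placeholders of mine, not anything Cloudinary provides. Percent-encoding source URLs that contain a query string is an assumption based on my reading of Cloudinary’s fetch guidance, so check it against their documentation before relying on it.

```javascript
// Hypothetical helper: builds a Cloudinary "fetch" URL for a remote image.
// cloudName is your own Cloudinary identifier; options are the transformation parameters.
function buildCloudinaryFetchUrl(cloudName, sourceUrl, options = 'q_auto:good,f_auto') {
  // Assumption: source URLs with a query string are percent-encoded so that
  // the "?" is not read as the start of the outer URL's own query string.
  const source = sourceUrl.includes('?') ? encodeURIComponent(sourceUrl) : sourceUrl;
  return `https://res.cloudinary.com/${cloudName}/image/fetch/${options}/${source}`;
}

// Example usage with placeholder values
console.log(buildCloudinaryFetchUrl('demo', 'https://original.image.example.com/image.png'));
```

Keeping the parameters as a default argument makes it easy to try other quality settings later without touching the rest of the URL.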

Bringing in Cloudflare Workers

Technically the above is all that is needed, and you could use those Cloudinary URLs directly. So where do Cloudflare Workers come in? Well, the URLs, as can be seen, are quite ugly with all those parameters included. Also, as generous as the Cloudinary free tier is, you can still exhaust it if you have a lot of images or traffic. And if you attempt to pass in an image greater than 10 MB, it’ll just fail with a 400 error due to limits on the free tier.

Cloudflare Workers can be used to hook on to URLs on our own domain, doing some checks before calling out to Cloudinary and returning the results. They also give you the option of caching those results within Cloudflare, so you don’t need to call out to the external service as often. Cloudflare’s free tier for Workers is generous too, allowing 100,000 worker requests per day, so combining the two services stretches both free tiers further.

First, if you’re not already using Cloudflare, you’ll need to sign up for an account, and then go through the process to create a new Page Worker and bind it to your domain. Their getting started docs are very good and can help with all this initial setup.

Once in, in my case, I decided on the following logic for the Cloudflare Worker:-

1) Bind the worker in Cloudflare to https://mydomain.com/optimizer/. By using a subfolder URL, it allows the rest of the domain addresses to continue acting as normal.

2) When anything accesses a URL starting https://mydomain.com/optimizer/ check whether the URL then ends in .png, .jpg, .jpeg or .gif (I left out WebP deliberately, as anyone using this format is likely already optimising their images well and there’d be little point optimising further)

3) If so, make a fetch HEAD call out to the original image URL (i.e. https://original.image.example.com/image.png) to check its size. If it is greater than 10 MB, return a 404. I chose a 404, but you could of course return something different, such as an ‘image too big’ holding image. This check is done because Cloudinary’s free tier does not allow bringing in an image larger than this.

4) If it’s less than 10 MB, make a fetch GET call out to https://res.cloudinary.com/*your_identifier*/image/fetch/q_auto:good,f_auto/https://original.image.url/image.png

5) Return the resulting optimised image from the worker, complete with Cloudflare cache headers for future accesses. I’ve also added a noindex robots header as I’d rather Google wasn’t indexing the images on these URLs instead of the original, but that’s an optional step.

There will, of course, still be some calls to the large images going round in the background between servers when following this approach. But they aren’t delivered to the end user, and also should only be happening on the first accesses before being cached for subsequent users.
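Steps 2 to 4 boil down to one small decision, which can be sketched as pure functions before any fetching is involved. The names `isTranscodable`, `isTooLarge` and `decide` are hypothetical, and checking the end of the URL path (rather than looking for the extension anywhere in the URL) is my own assumption about how strictly to read step 2.

```javascript
// Roughly 10 MB, the source size limit described for Cloudinary's free tier
const MAX_SOURCE_BYTES = 10000000;

// Step 2: does the remote URL's path end in an extension we want to transcode?
function isTranscodable(remoteUrl) {
  const path = new URL(remoteUrl).pathname.toLowerCase();
  return ['.png', '.jpg', '.jpeg', '.gif'].some(ext => path.endsWith(ext));
}

// Step 3: is the Content-Length header value (a string, or null if absent) over the limit?
// A missing or unparseable header is treated as "not too large".
function isTooLarge(contentLengthHeader) {
  const bytes = Number.parseInt(contentLengthHeader ?? '', 10);
  return Number.isFinite(bytes) && bytes > MAX_SOURCE_BYTES;
}

// Steps 2 to 4 collapsed into a single decision
function decide(remoteUrl, contentLengthHeader) {
  if (!isTranscodable(remoteUrl)) return 'passthrough';
  if (isTooLarge(contentLengthHeader)) return 'reject';
  return 'optimise';
}

// Example usage with placeholder values
console.log(decide('https://original.image.example.com/image.png', '2048'));
```

Separating the decision from the network calls like this makes the rules easy to check locally before deploying anything to the edge.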

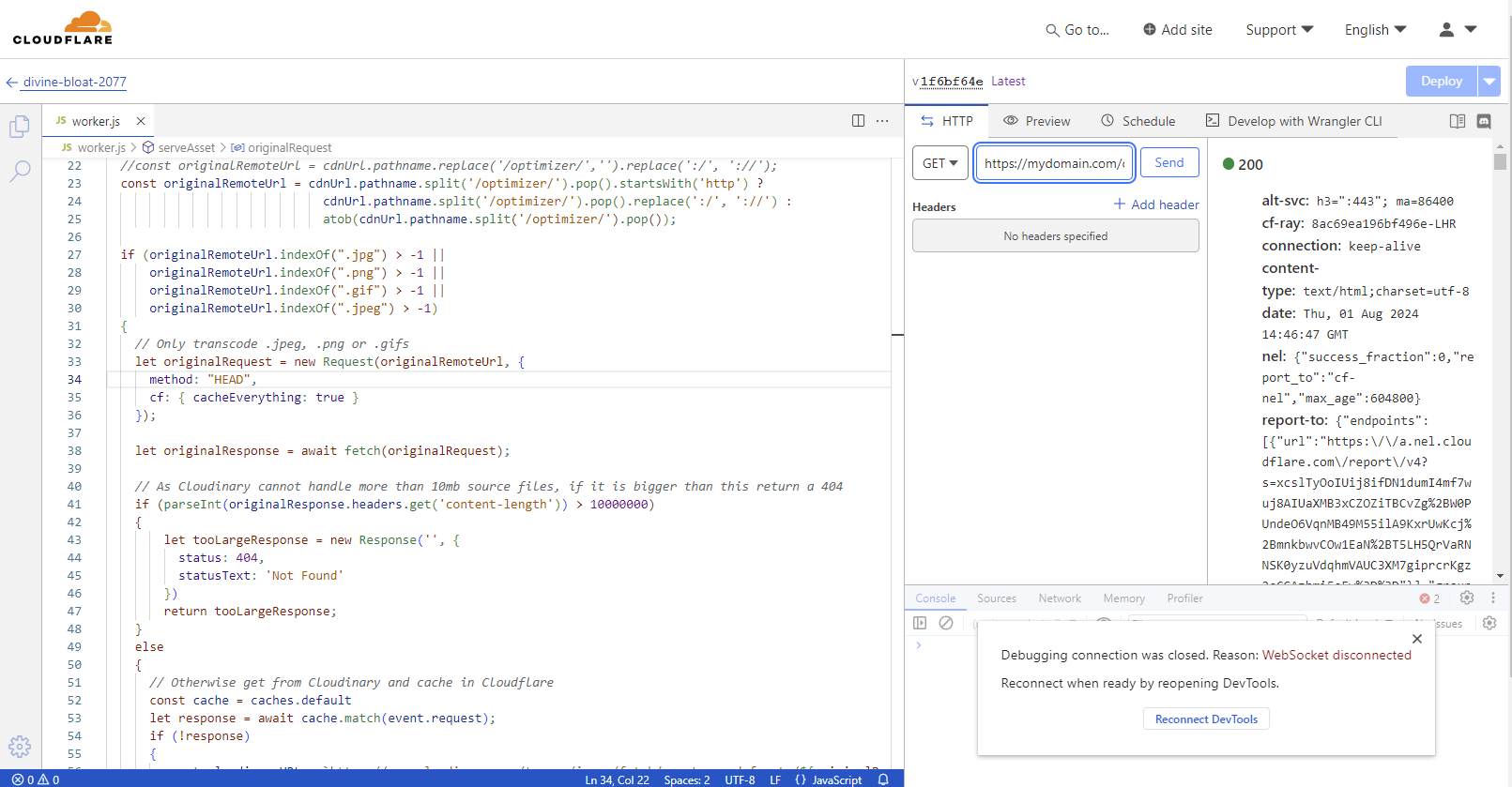

The resultant JavaScript code for this in the Cloudflare Worker ended up like the following:-

    addEventListener('fetch', event => {
      event.respondWith(handleRequest(event));
    });

    async function handleRequest(event) {
      // Only handle this if it's a GET request
      if (event.request.method === 'GET') {
        let response = await serveAsset(event);
        if (response.status > 399) {
          response = new Response(response.statusText, { status: response.status });
        }
        return response;
      }
      return new Response('Method not allowed', { status: 405 });
    }

    async function serveAsset(event) {
      // The path after /optimizer/ is either the remote URL in plaintext, or Base64 encoded
      const cdnUrl = new URL(event.request.url);
      const remotePart = cdnUrl.pathname.split('/optimizer/').pop();
      // The '//' after the scheme may arrive collapsed to '/', so restore 'https://'
      const originalRemoteUrl = remotePart.startsWith('http')
        ? remotePart.replace(/^(https?):\/+/, '$1://')
        : atob(remotePart);

      if (originalRemoteUrl.indexOf(".jpg") > -1 ||
          originalRemoteUrl.indexOf(".png") > -1 ||
          originalRemoteUrl.indexOf(".gif") > -1 ||
          originalRemoteUrl.indexOf(".jpeg") > -1)
      {
        // Only transcode .jpg, .jpeg, .png or .gif files
        let originalRequest = new Request(originalRemoteUrl, {
          method: "HEAD",
          cf: { cacheEverything: true }
        });
        let originalResponse = await fetch(originalRequest);

        // As Cloudinary cannot handle more than 10 MB source files, if it is bigger than this return a 404
        if (parseInt(originalResponse.headers.get('content-length'), 10) > 10000000)
        {
          return new Response('', {
            status: 404,
            statusText: 'Not Found'
          });
        }
        else
        {
          // Otherwise get from Cloudinary and cache in Cloudflare
          const cache = caches.default;
          let response = await cache.match(event.request);
          if (!response)
          {
            const cloudinaryURL = `https://res.cloudinary.com/*your_identifier*/image/fetch/q_auto:good,f_auto/${originalRemoteUrl}`;
            response = await fetch(cloudinaryURL, { headers: event.request.headers });
            const headers = new Headers(response.headers);
            headers.set("cache-control", "public, max-age=7200");
            headers.set("vary", "Accept");
            headers.set("X-Robots-Tag", "noindex");
            // Spreading a Response does not copy its status, so pass status and statusText explicitly
            response = new Response(response.body, {
              status: response.status,
              statusText: response.statusText,
              headers
            });
            event.waitUntil(cache.put(event.request, response.clone()));
          }
          return response;
        }
      }
      else
      {
        // If not a .gif, .jpg, .jpeg or .png, pass through the original file untouched
        let originalRequest = new Request(originalRemoteUrl, {
          method: "GET",
          cf: { cacheEverything: true }
        });
        return await fetch(originalRequest);
      }
    }

After doing that, any accesses to https://mydomain.com/optimizer/https://original.image.url/image.png should return a nicely optimised image. As a little side feature, I also added support to the worker code for Base64 encoding the original image URL, so https://mydomain.com/optimizer/aHR0cHM6Ly9vcmlnaW5hbC5pbWFnZS51cmwvaW1hZ2UucG5n would also return the same image. That means only someone who recognises Base64 can easily see the original URL. But that’s not going to be used in the rest of this example.
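The two URL forms can be sketched in JavaScript as a pair of helpers, one building the optimizer URL and one recovering the original from the path. The function names are hypothetical and `https://mydomain.com/optimizer/` is the same example base used throughout. One caveat I’d flag as an assumption worth testing: standard Base64 output can contain `/` and `+`, which may need URL-safe handling for some source URLs.

```javascript
const OPTIMIZER_BASE = 'https://mydomain.com/optimizer/'; // example base URL only

// Build the public-facing URL, either with the original URL in plaintext or Base64 encoded
function toOptimizerUrl(originalUrl, useBase64 = false) {
  return OPTIMIZER_BASE + (useBase64 ? btoa(originalUrl) : originalUrl);
}

// Recover the original URL from a request path: anything starting "http"
// is treated as plaintext, anything else is assumed to be Base64.
function fromOptimizerPath(pathname) {
  const tail = pathname.split('/optimizer/').pop();
  return tail.startsWith('http')
    ? tail.replace(/^(https?):\/+/, '$1://') // tolerate "https:/host" as well as "https://host"
    : atob(tail);
}

// Example usage: both forms resolve back to the same original URL
const original = 'https://original.image.url/image.png';
console.log(toOptimizerUrl(original));
console.log(toOptimizerUrl(original, true));
console.log(fromOptimizerPath(new URL(toOptimizerUrl(original, true)).pathname));
```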

Getting it Back Into Umbraco

The transparent proxy all works fine, but it still requires the original URL in the markup to be prefixed with your custom domain for it to kick in. To do this, I first created a simple string extension method. You pass in a string containing some HTML (there are overloads for HtmlEncodedString and HtmlString too). Using the HtmlAgilityPack package, it scans through for image tags, updates their URLs, and then returns the updated HTML.

    using System.Linq;
    using Microsoft.AspNetCore.Html;
    using HtmlAgilityPack;
    using NUglify.Helpers;
    using Umbraco.Cms.Core.Strings;

    namespace UmbracoScrub13.Extensions
    {
        public static class TextExtensions
        {
            public static HtmlEncodedString ReplaceImages(this HtmlEncodedString htmlEncodedString)
            {
                return new HtmlEncodedString(htmlEncodedString.ToHtmlString().ReplaceImages());
            }

            public static HtmlString ReplaceImages(this HtmlString contentString)
            {
                // Run the replacement on the underlying string (Value may be null)
                return new HtmlString((contentString.Value ?? string.Empty).ReplaceImages());
            }

            public static string ReplaceImages(this string contentString)
            {
                // Search for external images and replace
                var baseUrl = "https://mydomain.com/optimizer/"; // Example only. This can be moved to config

                HtmlDocument htmlDocument = new HtmlDocument();
                htmlDocument.LoadHtml(contentString);

                htmlDocument
                    .DocumentNode
                    .Descendants("img")
                    // Only absolute URLs, skipping any that already go through the optimizer
                    .Where(image => image.GetAttributeValue("src", "").StartsWith("http")
                        && !image.GetAttributeValue("src", "").StartsWith(baseUrl))
                    .ForEach(image =>
                    {
                        var imageSrc = image.GetAttributeValue("src", "");
                        imageSrc = $"{baseUrl}{imageSrc}";
                        image.SetAttributeValue("src", imageSrc);
                    });

                contentString = htmlDocument.DocumentNode.OuterHtml;
                return contentString;
            }
        }
    }

That extension method can then be applied wherever Rich Text or Markdown editor values are output in the view markup by simply doing:-

@Model.MyPropertyName.ReplaceImages()

Make sure the relevant using statements for the namespace are included at the top of the view too. This approach does, however, require replacements to be done in multiple views which, depending on the size of the project, can become difficult to track and maintain.

A more global approach is to create your own Property Value Converter for Rich Text and Markdown editors and call the newly created replacement method from there. This means it’ll then happen automatically whenever you output the strongly typed value in the code. As covered in the previous blog post, the exact amount of code needed for doing this varies a little for Rich Text Editors versus Markdown Editors, due to the greater complexity of the former. However, I’ve included a demo of the code for both below using the original Umbraco core converters as a base, as it functions within Umbraco 13. Umbraco 14 requires some changes, mainly for the Rich Text Editor code, as they’ve refactored references to ‘RteMacro’ to ‘RteBlock’, and reduced the number of constructors to one.

Rich Text Editor – CustomRteMacroRenderingValueConverter.cs

    using Microsoft.Extensions.Logging;
    using Microsoft.Extensions.Options;
    using Umbraco.Cms.Core.Blocks;
    using Umbraco.Cms.Core.Configuration.Models;
    using Umbraco.Cms.Core.Macros;
    using Umbraco.Cms.Core.Models.PublishedContent;
    using Umbraco.Cms.Core.Strings;
    using Umbraco.Cms.Core.Templates;
    using Umbraco.Cms.Core.Web;
    using Umbraco.Cms.Core.DeliveryApi;
    using Umbraco.Cms.Core.Serialization;
    using Umbraco.Cms.Core.PropertyEditors;
    using UmbracoScrub13.Extensions;
    using Umbraco.Cms.Core.PropertyEditors.ValueConverters;

    namespace UmbracoScrub13.PropertyValueConverters
    {
        public class CustomRteMacroRenderingValueConverter : RteMacroRenderingValueConverter
        {
            public CustomRteMacroRenderingValueConverter(IUmbracoContextAccessor umbracoContextAccessor, IMacroRenderer macroRenderer, HtmlLocalLinkParser linkParser, HtmlUrlParser urlParser, HtmlImageSourceParser imageSourceParser) : base(umbracoContextAccessor, macroRenderer, linkParser, urlParser, imageSourceParser)
            {
            }

            public CustomRteMacroRenderingValueConverter(IUmbracoContextAccessor umbracoContextAccessor, IMacroRenderer macroRenderer, HtmlLocalLinkParser linkParser, HtmlUrlParser urlParser, HtmlImageSourceParser imageSourceParser, IApiRichTextElementParser apiRichTextElementParser, IApiRichTextMarkupParser apiRichTextMarkupParser, IOptionsMonitor<DeliveryApiSettings> deliveryApiSettingsMonitor) : base(umbracoContextAccessor, macroRenderer, linkParser, urlParser, imageSourceParser, apiRichTextElementParser, apiRichTextMarkupParser, deliveryApiSettingsMonitor)
            {
            }

            public CustomRteMacroRenderingValueConverter(IUmbracoContextAccessor umbracoContextAccessor, IMacroRenderer macroRenderer, HtmlLocalLinkParser linkParser, HtmlUrlParser urlParser, HtmlImageSourceParser imageSourceParser, IApiRichTextElementParser apiRichTextElementParser, IApiRichTextMarkupParser apiRichTextMarkupParser, IPartialViewBlockEngine partialViewBlockEngine, BlockEditorConverter blockEditorConverter, IJsonSerializer jsonSerializer, IApiElementBuilder apiElementBuilder, RichTextBlockPropertyValueConstructorCache constructorCache, ILogger<Umbraco.Cms.Core.PropertyEditors.ValueConverters.RteMacroRenderingValueConverter> logger, IOptionsMonitor<DeliveryApiSettings> deliveryApiSettingsMonitor) : base(umbracoContextAccessor, macroRenderer, linkParser, urlParser, imageSourceParser, apiRichTextElementParser, apiRichTextMarkupParser, partialViewBlockEngine, blockEditorConverter, jsonSerializer, apiElementBuilder, constructorCache, logger, deliveryApiSettingsMonitor)
            {
            }

            public override object ConvertIntermediateToObject(IPublishedElement owner, IPublishedPropertyType propertyType,
                PropertyCacheLevel referenceCacheLevel, object? inter, bool preview)
            {
                // Let the core converter build the HTML, then rewrite external image URLs
                var html = (HtmlEncodedString)base.ConvertIntermediateToObject(owner, propertyType, referenceCacheLevel, inter, preview);
                return html.ReplaceImages();
            }
        }
    }

Markdown Editor – CustomMarkdownEditorValueConverter.cs

    using HeyRed.MarkdownSharp;
    using Umbraco.Cms.Core;
    using Umbraco.Cms.Core.Models.PublishedContent;
    using Umbraco.Cms.Core.PropertyEditors;
    using Umbraco.Cms.Core.Strings;
    using Umbraco.Cms.Core.Templates;
    using UmbracoScrub13.Extensions;

    namespace UmbracoScrub13.PropertyValueConverters
    {
        public class CustomMarkdownEditorValueConverter : PropertyValueConverterBase
        {
            private readonly HtmlLocalLinkParser _localLinkParser;
            private readonly HtmlUrlParser _urlParser;

            public CustomMarkdownEditorValueConverter(HtmlLocalLinkParser localLinkParser, HtmlUrlParser urlParser)
            {
                _localLinkParser = localLinkParser;
                _urlParser = urlParser;
            }

            public override bool IsConverter(IPublishedPropertyType propertyType)
                => propertyType.EditorAlias == Constants.PropertyEditors.Aliases.MarkdownEditor;

            public override Type GetPropertyValueType(IPublishedPropertyType propertyType)
                => typeof(IHtmlEncodedString);

            public override PropertyCacheLevel GetPropertyCacheLevel(IPublishedPropertyType propertyType)
                => PropertyCacheLevel.Element;

            public override object? ConvertSourceToIntermediate(IPublishedElement owner, IPublishedPropertyType propertyType, object? source, bool preview)
            {
                if (source == null)
                {
                    return null;
                }

                var sourceString = source.ToString()!;

                // ensures string is parsed for {localLink} and URLs are resolved correctly
                sourceString = _localLinkParser.EnsureInternalLinks(sourceString, preview);
                sourceString = _urlParser.EnsureUrls(sourceString);

                return sourceString;
            }

            public override object ConvertIntermediateToObject(IPublishedElement owner, IPublishedPropertyType propertyType, PropertyCacheLevel referenceCacheLevel, object? inter, bool preview)
            {
                // convert markup to HTML for frontend rendering.
                // source should come from ConvertSource and be a string (or null) already
                var mark = new Markdown();
                var html = (inter == null ? string.Empty : mark.Transform((string)inter)).ReplaceImages();

                return new HtmlEncodedString(html);
            }
        }
    }

And with that you have a way of closing the gap on those external images too. It’s a very different approach to the problem, which may or may not be useful to others. But it’s been a fun experiment for me either way.